
The Human Factors Analysis and Classification System–HFACS

The “Swiss cheese” model of accident causation

February 2000

Introduction

Sadly, the annals of aviation history are littered with accidents and tragic losses. Since the late 1950s, however, the drive to reduce the accident rate has yielded unprecedented levels of safety, to the point where it is now safer to fly in a commercial airliner than to drive a car or even walk across a busy New York City street. Still, while the aviation accident rate has declined tremendously since the first flights nearly a century ago, the cost of aviation accidents in both lives and dollars has steadily risen. As a result, the effort to reduce the accident rate still further has taken on new meaning within both military and civilian aviation.

Even with all the innovations and improvements realized in the last several decades, one fundamental question remains generally unanswered: “Why do aircraft crash?” The answer may not be as straightforward as one might think. In the early years of aviation, it could reasonably be said that, more often than not, the aircraft killed the pilot. That is, the aircraft were intrinsically unforgiving and, relative to their modern counterparts, mechanically unsafe. However, the modern era of aviation has witnessed an ironic reversal of sorts. It now appears to some that the aircrew themselves are more deadly than the aircraft they fly (Mason, 1993; cited in Murray, 1997). In fact, estimates in the literature indicate that between 70 and 80 percent of aviation accidents can be attributed, at least in part, to human error (Shappell & Wiegmann, 1996). Still, to off-handedly attribute accidents solely to aircrew error is like telling patients they are simply “sick” without examining the underlying causes or further defining the illness.

So what really constitutes the 70 to 80 percent of human error repeatedly referred to in the literature? Some would have us believe that human error and “pilot” error are synonymous. Yet simply writing off aviation accidents as pilot error is an overly simplistic, if not naive, approach to accident causation. After all, it is well established that accidents cannot be attributed to a single cause or, in most instances, even to a single individual (Heinrich, Petersen, & Roos, 1980). In fact, even the identification of a “primary” cause is fraught with problems. Rather, aviation accidents are the end result of a number of causes, only the last of which are the unsafe acts of the aircrew (Reason, 1990; Shappell & Wiegmann, 1997a; Heinrich, Petersen, & Roos, 1980; Bird, 1974).

The challenge for accident investigators and analysts alike is how best to identify and mitigate the causal sequence of events, in particular the 70 to 80 percent associated with human error. Faced with this challenge, those interested in accident causation are left with a growing list of investigative schemes to choose from. In fact, there are nearly as many approaches to accident causation as there are people involved in the process (Senders & Moray, 1991). Nevertheless, a comprehensive framework for identifying and analyzing human error continues to elude safety professionals and theorists alike. Consequently, interventions cannot be accurately targeted at specific human causal factors, nor can their effectiveness be objectively measured and assessed. Instead, safety professionals are left with the status quo: interest- or fad-driven research resulting in intervention strategies that peck around the edges of accident causation but do little to reduce the overall accident rate. What is needed is a framework around which a needs-based, data-driven safety program can be developed (Wiegmann & Shappell, 1997).

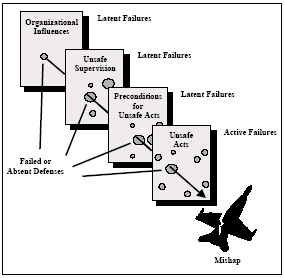

Reason’s “Swiss Cheese” Model of Human Error

One particularly appealing approach to the genesis of human error is the one proposed by James Reason (1990). Generally referred to as the “Swiss cheese” model of human error, Reason’s model describes four levels of human failure, each influencing the next (Figure 1). Working backwards in time from the accident, the first level depicts those Unsafe Acts of Operators that ultimately led to the accident[1].

More commonly referred to in aviation as aircrew/pilot error, this level is where most accident investigations have focused their efforts and, consequently, where most causal factors are uncovered. After all, it is typically the actions or inactions of aircrew that are directly linked to the accident. For instance, failing to properly scan the aircraft’s instruments while in instrument meteorological conditions (IMC) or penetrating IMC when authorized only for visual meteorological conditions (VMC) may yield relatively immediate, and potentially grave, consequences. Represented as “holes” in the cheese, these active failures are typically the last unsafe acts committed by aircrew.

[1] Reason’s original work involved operators of a nuclear power plant. However, for the purposes of this manuscript, the operators here refer to aircrew, maintainers, supervisors and other humans involved in aviation.

However, what makes the “Swiss cheese” model particularly useful in accident investigation is that it forces investigators to address latent failures within the causal sequence of events as well. As their name suggests, latent failures, unlike their active counterparts, may lie dormant or undetected for hours, days, weeks, or even longer, until one day they adversely affect the unsuspecting aircrew. Consequently, they may be overlooked by investigators with even the best intentions.

Within this concept of latent failures, Reason described three more levels of human failure. The first involves the condition of the aircrew as it affects performance. Referred to as Preconditions for Unsafe Acts, this level involves conditions such as mental fatigue and poor communication and coordination practices, often referred to as crew resource management (CRM). Not surprisingly, if fatigued aircrew fail to communicate and coordinate their activities with others in the cockpit or individuals external to the aircraft (e.g., air traffic control, maintenance), poor decisions are made and errors often result.

Figure 1. The “Swiss cheese” model of human error causation (adapted from Reason, 1990).

But exactly why did communication and coordination break down in the first place? This is perhaps where Reason’s work departed from more traditional approaches to human error. In many instances, the breakdown in good CRM practices can be traced back to instances of Unsafe Supervision, the third level of human failure. If, for example, two inexperienced (and perhaps even below-average) pilots are paired with each other and sent on a flight into known adverse weather at night, is anyone really surprised by a tragic outcome? To make matters worse, if this questionable manning practice is coupled with a lack of quality CRM training, the potential for miscommunication and, ultimately, aircrew errors is magnified. In a sense, then, the crew was “set up” for failure, since crew coordination, and ultimately performance, would be compromised. This is not to lessen the role played by the aircrew, only to suggest that intervention and mitigation strategies might lie higher within the system.

Reason’s model didn’t stop at the supervisory level either; the organization itself can impact performance at all levels. For instance, in times of fiscal austerity, funding is often cut, and as a result, training and flight time are curtailed. Consequently, supervisors are often left with no alternative but to assign complex tasks to “non-proficient” aviators. Not surprisingly, then, in the absence of good CRM training, communication and coordination failures will begin to appear, as will a myriad of other preconditions, all of which will affect performance and elicit aircrew errors. Therefore, it makes sense that if the accident rate is going to be reduced beyond current levels, investigators and analysts alike must examine the accident sequence in its entirety and expand it beyond the cockpit. Ultimately, causal factors at all levels within the organization must be addressed if any accident investigation and prevention system is going to succeed.
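
A minimal sketch may help make the layered structure concrete. It encodes the four levels in the order used above (working backwards from the accident), tags Unsafe Acts as active failures and the three higher levels as latent failures, and adds a deliberately simplified reading of Figure 1 in which an accident trajectory gets through only if every level has a “hole” on its path. The names `Level`, `failure_type`, and `trajectory_passes` are hypothetical, the organizational level is labeled here as organizational influences, and the all-levels-must-fail rule is an illustrative simplification rather than a formal part of Reason’s model.

```python
from __future__ import annotations

from enum import IntEnum


class Level(IntEnum):
    """Reason's four levels of failure, numbered working backwards from the accident."""
    UNSAFE_ACTS = 1
    PRECONDITIONS_FOR_UNSAFE_ACTS = 2
    UNSAFE_SUPERVISION = 3
    ORGANIZATIONAL_INFLUENCES = 4


def failure_type(level: Level) -> str:
    """Unsafe acts are active failures; the three higher levels are latent failures."""
    return "active" if level is Level.UNSAFE_ACTS else "latent"


def trajectory_passes(holes: dict[Level, bool]) -> bool:
    """Simplified (hypothetical) reading of Figure 1: the accident trajectory
    passes only if every level has a hole on its path.

    A level missing from `holes` is treated as intact (no hole).
    """
    return all(holes.get(level, False) for level in Level)
```

For example, `trajectory_passes({Level.ORGANIZATIONAL_INFLUENCES: True, Level.UNSAFE_SUPERVISION: True, Level.PRECONDITIONS_FOR_UNSAFE_ACTS: True})` returns `False`: latent failures are present at every higher level, but the Unsafe Acts layer is treated as intact, so in this simplified view the trajectory is stopped there.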

In many ways, Reason’s “Swiss cheese” model of accident causation has revolutionized common views of accident causation. Unfortunately, it is simply a theory with few details on how to apply it in a real-world setting. In other words, the theory never defines what the “holes in the cheese” really are, at least within the context of everyday operations. Ultimately, one needs to know what these system failures or “holes” are, so that they can be identified during accident investigations or, better yet, detected and corrected before an accident occurs.

The balance of this paper will attempt to describe the “holes in the cheese.” However, the holes are not defined here using esoteric theories with little or no practical applicability. Instead, the original framework (called the Taxonomy of Unsafe Operations) was developed using over 300 Naval aviation accidents obtained from the U.S. Naval Safety Center (Shappell & Wiegmann, 1997a). The original taxonomy has since been refined using input and data from other military (U.S. Army Safety Center and the U.S. Air Force Safety Center) and civilian organizations (National Transportation Safety Board and the Federal Aviation Administration). The result was the development of the Human Factors Analysis and Classification System (HFACS).
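
Once causal factors from accident reports are coded by level, even a simple tally can suggest where interventions might be targeted, which is the kind of needs-based, data-driven use this introduction calls for. The sketch below counts how many accidents involve at least one causal factor at each of the four levels. The function name `tally_by_level`, the record format (one list of level labels per accident), and the records in the usage note are hypothetical; this is not the HFACS coding procedure itself.

```python
from __future__ import annotations

from collections import Counter

# The four levels of Reason's model, used as coding labels (hypothetical format).
LEVELS = (
    "Unsafe Acts",
    "Preconditions for Unsafe Acts",
    "Unsafe Supervision",
    "Organizational Influences",
)


def tally_by_level(accidents: list[list[str]]) -> Counter:
    """Count how many accidents involve at least one causal factor at each level.

    Each accident is a list of level labels, one per coded causal factor.
    Repeated labels within one accident are counted once, so the unit is
    accidents, not factors. Unknown labels raise ValueError.
    """
    counts = Counter({level: 0 for level in LEVELS})  # keep zero entries visible
    for factors in accidents:
        unknown = set(factors) - set(LEVELS)
        if unknown:
            raise ValueError(f"unrecognized level(s): {sorted(unknown)}")
        counts.update(set(factors))
    return counts
```

With three invented records, `tally_by_level([["Unsafe Acts", "Preconditions for Unsafe Acts"], ["Unsafe Acts", "Unsafe Acts", "Unsafe Supervision"], ["Organizational Influences", "Unsafe Acts"]])` gives a count of 3 for Unsafe Acts and 1 for each of the other levels; the repeated label in the second record is counted once.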
